Days after Chinese AI chatbot DeepSeek shook the technology world by being roughly 95% less resource-hungry than ChatGPT, questions have arisen about the viability of existing and future AI projects. A new analysis by BestBrokers delves into the electricity consumption of ChatGPT.


Earlier this month, U.S. President Donald Trump presented a new AI-focused project called Stargate, which aims to bolster AI infrastructure in the United States. Under the initiative, a total of five data centers will be developed in the country. Each of these is expected to consume massive amounts of electricity for developing and training new, advanced versions of ChatGPT. In an attempt to estimate the power demand of this gargantuan project, BestBrokers looked into the electricity OpenAI consumed in training its existing models.

More power for AI models?

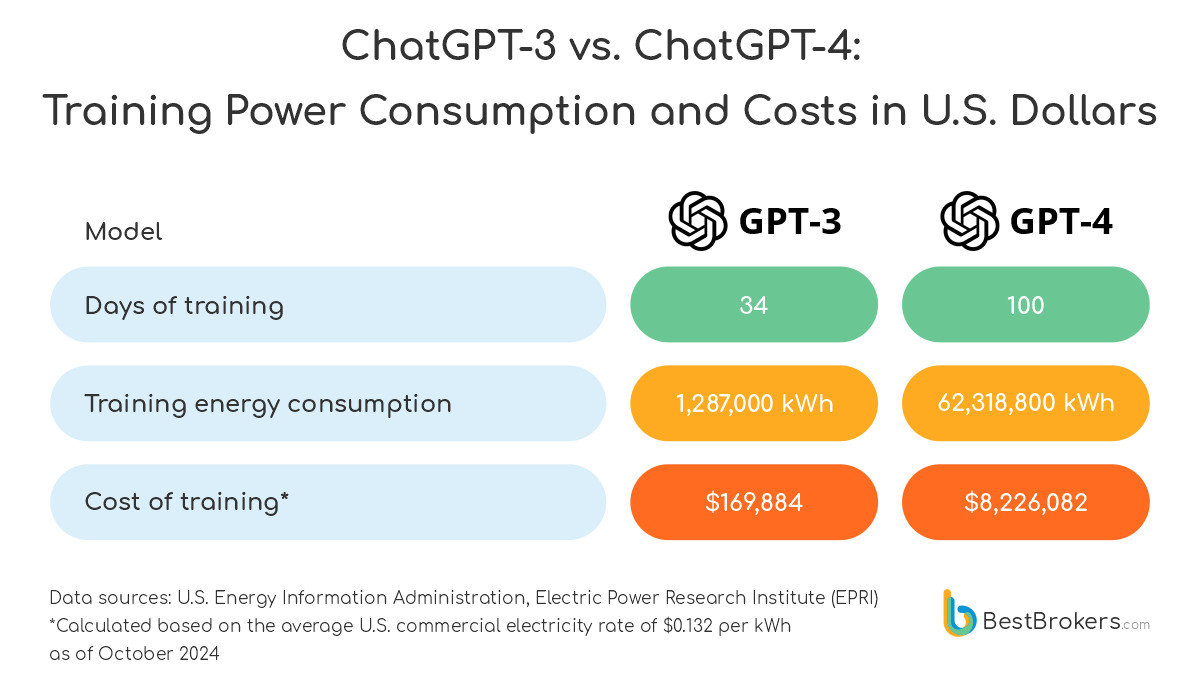

Every new version of the chatbot has required more resources, including electricity. Over the 34 days of training of ChatGPT-3, which had 175 billion parameters, 1,287 MWh of electricity was used. The following, much more advanced model required a longer training period and consumed more resources.

Power consumption of AI models

Here is the simple calculation we did:

- ChatGPT-3 (175 billion parameters): 34 days of training consumed 1,287 MWh, or 37.85 MWh per day

- ChatGPT-4 (1 trillion parameters): 100 days of training consumed 62,318.8 MWh, or 623.19 MWh per day

- ChatGPT-4o – optimised for efficiency, with an undisclosed number of parameters and architecture

This means that the average daily electricity consumption of ChatGPT-4 training was about 16.5 times that of ChatGPT-3 training (623.19 MWh versus 37.85 MWh per day). If the next iteration of the chatbot, the one presumably to be researched and trained in the upcoming Stargate facilities, scales up by the same factor, it could require an average of 10,259.79 MWh of electricity per day.

This is a massive amount of energy, enough to supply electricity to 353,786 U.S. households, based on an average U.S. household electricity consumption of 29 kWh per day, or 10.59 MWh per year. While China’s DeepSeek claims to reduce energy demand, the U.S.-based OpenAI and its chatbot require huge resources with a potentially catastrophic environmental impact.
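The arithmetic behind these estimates can be reproduced with a short Python sketch. It assumes the quoted training figures are energy totals in MWh and that the next model scales by the same daily ratio as ChatGPT-4 did over ChatGPT-3; the function and constant names are illustrative and do not come from BestBrokers.

```python
# Figures quoted in the article; units are assumed to be MWh (energy), not MW.
GPT3_ENERGY_MWH = 1_287.0
GPT3_TRAINING_DAYS = 34
GPT4_ENERGY_MWH = 62_318.8
GPT4_TRAINING_DAYS = 100
HOUSEHOLD_KWH_PER_DAY = 29.0  # average U.S. household, as quoted


def daily_average(total_mwh: float, days: int) -> float:
    """Average energy used per training day, in MWh."""
    return total_mwh / days


def project_next_daily(prev_daily: float, curr_daily: float) -> float:
    """Assume the next model grows by the same ratio as the last step did."""
    ratio = curr_daily / prev_daily
    return curr_daily * ratio


def households_supplied(daily_mwh: float) -> float:
    """Number of average households whose daily use matches daily_mwh."""
    return daily_mwh * 1_000 / HOUSEHOLD_KWH_PER_DAY  # MWh -> kWh


gpt3_daily = daily_average(GPT3_ENERGY_MWH, GPT3_TRAINING_DAYS)
gpt4_daily = daily_average(GPT4_ENERGY_MWH, GPT4_TRAINING_DAYS)
next_daily = project_next_daily(gpt3_daily, gpt4_daily)
households = households_supplied(next_daily)

print(f"ChatGPT-3: {gpt3_daily:.2f} MWh/day")
print(f"ChatGPT-4: {gpt4_daily:.2f} MWh/day")
print(f"Ratio: {gpt4_daily / gpt3_daily:.1f}x")
print(f"Projected next model: {next_daily:,.2f} MWh/day")
print(f"Equivalent households: {households:,.0f}")
```

Under these assumptions the sketch arrives at the same figures as the calculation above: a ratio of about 16.5, a projected 10,259.79 MWh per day, and roughly 353,786 households.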

ChatGPT and Stargate challenge

Of course, such calculations are purely speculative and based on existing data and estimates about the previous versions of ChatGPT, so take them with a grain of salt. According to Business Insider, the first Stargate data center in Abilene, Texas, will have its own natural gas plant, which will provide up to 360.5 MW of power. It will be built using 10 simple-cycle turbines supplied by Massachusetts-based GE Vernova and Caterpillar Inc.’s subsidiary Solar Turbines. Additionally, batteries and renewable energy from solar panels are planned for the facility.

More information about Stargate

The initiative led by ChatGPT maker OpenAI will be a joint venture that will also include U.S. software giant Oracle, Japan’s SoftBank, and Abu Dhabi’s investment firm MGX. Backed by a $500 billion investment over the next four years, Stargate will focus on creating advanced data centers equipped with cutting-edge technology to support AI research and applications.